Ben Snow: The Evolution of ILM’s Lighting Tools

ILM’s Iron Man 2 tools were not developed in a vacuum. They were built up over many years, and running in parallel with that development, especially in recent years, is the personal career path of Ben Snow. A clean line can be drawn from films like Galaxy Quest and Pearl Harbor, through Iron Man and Terminator Salvation, to last year’s blockbuster Iron Man 2. Along the way ILM invented, or was on the cutting edge of, most of the developments in CG lighting of the last decade. From ambient occlusion to energy-conserving image based lighting (IBL), ILM has worked hard to perfect realism in movie lighting and effects.

Image Based Lighting

Much of what ILM has done with lighting has revolved around the concept of image based lighting. The approach is to light a 3D scene, or a 3D element to be composited into a live-action plate, using global illumination. This contrasts with lighting from sources such as a computer-simulated sun or light bulb, which are localized and come from a single point. In reality, light from any source bounces off everything in the scene, so a CG element is lit not only by the primary source but by every other surface around it. Images rendered with global illumination algorithms often appear more photoreal than images rendered with direct illumination alone, but they are computationally more expensive and consequently much slower to generate.

IBL is therefore connected to global illumination and radiosity. It is also tied to the history of how that illumination is sampled: IBL uses light probes and HDRs to capture, or sample, the light on set and feed it back into the IBL at ILM. Ben Snow has had a front-row seat not only for the development of this at ILM but also for its implementation on a host of films. While much of the maths was understood early on, it was completely impractical to jump straight to a full IBL, HDR-driven solution, and even as computer power caught up, that was not the whole story. Today there are still practical issues with capturing and deploying HDR environments. As Snow comments, “we have a great R&D department but it is often the demands of the film that drive what the R&D work on.”

In this article we plot the development of the lighting tools through a selection of ILM films. This list is neither all the films Ben Snow worked on nor even all the ILM films that advanced the state of the art, but each of the films below is significant, from a lighting-model point of view, in the evolution of ILM’s lighting system.
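
The core IBL idea, lighting a surface by integrating the captured environment over the hemisphere above it rather than summing a few point lights, can be sketched in a few lines. This is a minimal Monte Carlo sketch, assuming the light probe is available as a function from a unit direction to a single-channel radiance value; `sky_probe`, `diffuse_irradiance` and the other names are hypothetical, and production renderers use importance-sampled HDR maps in full color.

```python
import math
import random


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def cosine_sample_hemisphere(normal, rng):
    """Pick a direction above `normal` with probability density cos(theta) / pi."""
    u1, u2 = rng.random(), rng.random()
    r, phi = math.sqrt(u1), 2.0 * math.pi * u2
    local = (r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1))
    # Orthonormal frame (t, b, n) built around the surface normal.
    helper = (1.0, 0.0, 0.0) if abs(normal[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = normalize(cross(helper, normal))
    b = cross(normal, t)
    return tuple(local[0] * t[i] + local[1] * b[i] + local[2] * normal[i]
                 for i in range(3))


def diffuse_irradiance(environment, normal, samples=256, seed=0):
    """Estimate E(n), the integral of L(w) * max(0, n.w) over the hemisphere."""
    rng = random.Random(seed)
    total = sum(environment(cosine_sample_hemisphere(normal, rng))
                for _ in range(samples))
    # With a cos/pi sampling density the cosine cancels, leaving pi * mean(L).
    return math.pi * total / samples


# Hypothetical light probe: uniformly bright sky above the horizon, black ground below.
def sky_probe(direction):
    return 1.0 if direction[2] > 0.0 else 0.0


up = diffuse_irradiance(sky_probe, (0.0, 0.0, 1.0))
down = diffuse_irradiance(sky_probe, (0.0, 0.0, -1.0))
```

Here `up` comes out at π (the whole cosine-weighted hemisphere sees sky) and `down` at 0. Every direction in the probe contributes, which is what distinguishes this from lighting with a single localized source.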

Star Trek Generations (1994)

Ben Snow joined ILM, and the first film he worked on was Star Trek 7, or Generations as it was called at release. “When I got there I did a lot of training and I implemented half the RenderMan Companion (Steve Upstill’s The RenderMan Companion) – which is good if you have an opportunity to do, and they put me on developing the CG version of the Enterprise-B in that film.” So began a recurring theme for Snow at ILM: producing realistic computer graphics versions of models or real rigid-body objects with complex finishes, a task he is still exploring, albeit with some great creative detours, nearly twenty years later.

Twister (1996)

Twister was significant for Snow, and not just because of its success: it would lead to other key projects. Snow worked on the film in 1995/1996. It was remarkable at the time for its hand-done matchmoving, since it predated 3D tracking software. Snow recounts how Steven Spielberg sent the team a note commenting that the twisters at that stage looked almost right but not completely believable, “like a man who has dyed his hair,” yet Spielberg was also singularly impressed with the tracking. As Snow puts it, “he just didn’t think they could get it that good.”

Speed 2: Cruise Control (1997)

Snow was not directly involved with Speed 2, but on that film the ILM team faked expensive ray tracing by using a reflection occlusion map for the shiny windows of the cruise ship. This work was the precursor to the ambient occlusion that the ILM team would develop and deploy on Pearl Harbor a few years later.

Reflection Occlusion

The way the reflection occlusion pass worked was simple. It addressed the problem of reflections not being correctly occluded (blocked) when an all-encompassing reflection environment is used. Imagine the ship inside a giant reflection map. That single map would affect every material on the ship flagged as reflective, everywhere. Once you had produced a map (or matte) identifying every area that was self-occluded, or blocked by other objects on the ship, like a door blocking a window from seeing certain reflections, you could use it to attenuate the reflection in areas that shouldn’t have one. The brilliance was that this map of where reflections should or shouldn’t be could be worked out once and then carried forward with the model. It was a ‘hold-out’ matte based on self-blocking, or occlusion, that did not need to be recalculated every time the reflections or materials changed. It was still rendered for every frame, but as an additional pass its cost was small, so long as you did not have to fully ray trace a complex model every frame to work out the reflections themselves (which is the expensive bit). All the ILM team needed to do was render this one solution in whatever position the ship was in, and they had a poor man’s ray-traced ship with shiny reflective surfaces and windows.

Interestingly, the reflection occlusion developed during Speed 2 was put into full production on Star Wars: Episode I. Reflection occlusion was originally developed by Stefen Fangmeier, and the reflection blur algorithm was implemented by Barry Armour. Dan Goldman refined it for use on Episode I, with contributions from people like Will Anielewicz and Ken McGaugh. Building on this, McGaugh would go on to win a sci-tech award (see below).
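
The reflection occlusion idea can be sketched as two separate steps: a visibility pass that records how much of the reflected direction is blocked by the model itself, and a cheap shading step that simply scales the environment-map reflection by that stored value. This is a minimal sketch, assuming the occluding parts of the model are approximated by spheres and using a crude random jitter as a stand-in for reflection blur; all names are hypothetical, not ILM's code.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def reflect(incident, normal):
    """Mirror the incident (camera-to-surface) direction about the normal."""
    k = 2.0 * dot(incident, normal)
    return tuple(i - k * n for i, n in zip(incident, normal))


def ray_hits_sphere(origin, direction, center, radius):
    """True if origin + t * direction hits the sphere for some t > 0 (unit direction)."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return False
    root = math.sqrt(disc)
    return -b - root > 1e-6 or -b + root > 1e-6


def reflection_visibility(point, normal, incident, occluders, blur=0.0, samples=16, seed=0):
    """Occlusion pass: fraction of (jittered) reflection rays that escape to the environment."""
    rng = random.Random(seed)
    r = reflect(normalize(incident), normal)
    escaped = 0
    for _ in range(samples):
        # Crude stand-in for a glossy reflection cone, not a physically based lobe.
        d = normalize(tuple(c + rng.uniform(-blur, blur) for c in r))
        if not any(ray_hits_sphere(point, d, c, rad) for c, rad in occluders):
            escaped += 1
    return escaped / samples


def shade_reflection(env_radiance, visibility, reflectivity):
    """Shading step: the stored visibility simply attenuates the environment lookup."""
    return env_radiance * visibility * reflectivity
```

Because `reflection_visibility` does not depend on the reflection map or the material, its result can be stored per surface point and reused while the environment or reflectivity is changed, which is the property the text describes.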

Galaxy Quest (1999)

The visual effects producer and supervisor who had worked with Snow on Twister begged him to join the Galaxy Quest project. “I was not too sure,” says Snow, “but then I read the script and I was like ‘Oh my God, this is perfect, this is exactly what I want to do.’ Galaxy Quest was great as I like to mix it up, and that film had character work, the Omega 13, and the star field. One of the jobs I had on that film was to revamp Mark Dippé’s star field generation, to allow us to paint galaxies.”

Snow was Associate Visual Effects Supervisor on Galaxy Quest, but through some luck and scheduling adjustments he ended up in a more prominent role. At the time ILM was also working on The Perfect Storm, “which had incredibly challenging stuff,” according to Snow. “So what happened is that Stefen (Fangmeier) transitioned to Perfect Storm (from Galaxy Quest) and Bill George took over, and Bill is from an art direction and model shop background and (at that time) had not done a lot of CG creatures, so they gave me a lot of opportunities, to start with scenes from what Bill had established the look and then I could supervise a lot of creature work like the alien babies and the rock monster, which meant that the film was in many ways my big break.”

Realistic lighting: the move to environmental real world lighting

On Galaxy Quest, Snow and the team introduced a new lighting model developed by Jonathan Litt and Dan Goldman. “They did all the leg work and put it together,” says Snow. The rock monster was an interesting case: the creature was in full sun, and it was hard to make it look as though it were formed of natural rock. “It was difficult to reproduce all the bounce in this environment and still get realistic shadowing. We used a large number of lights of low intensity,” says Snow. He also confesses that they used tricks and hacks such as simply lowering the opacity of shadows to try to make them blend in, “which can end up looking really fake.”

“The rock monster was an interesting challenge and a bit of a frustration to be frank,” says Snow. He explains that in those days at ILM, when they were trying to get more of “an ambient bounce diffuse / diffuse illumination, where one surface would reflect light back onto another surface, it was tricky, you would use a combination of the standard ambient light, specular and diffuse illumination tools and what you would do is things like turn down the shadow opacity, which is horrible, and starts to look fake, so you would have multiple lights and thus only turn down the shadows a little bit. And all of this was just trying to approximate a broad area light (in an environment) which was all very expensive to compute.” With the rock monster, ILM tried very hard to make the CG rocks look real in full sun in a bright desert environment, “with light bouncing around all over the place, all done with just point source lights and it wasn’t easy,” says Snow. “So it works and it’s a fun character in the film, but [when] we came off film we all wished there were some better tools for this.”
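
The difference between the two hacks Snow describes can be shown in a toy 2D setup: a broad area light approximated by many low-intensity point lights produces a graded penumbra, while a single shadowed light with reduced shadow opacity lifts every shadowed point by the same amount. This is a sketch under simplifying assumptions (a flat ground line, one horizontal blocker, no distance or cosine falloff); the scene and the function names are hypothetical.

```python
def make_visibility(blocker_y, blocker_x0, blocker_x1):
    """Visibility test for ground points (x, 0) against a horizontal blocker segment."""
    def is_visible(ground_x, light):
        lx, ly = light
        # Where the ground-to-light segment crosses the blocker's height.
        cross_x = ground_x + (lx - ground_x) * (blocker_y / ly)
        return not (blocker_x0 <= cross_x <= blocker_x1)
    return is_visible


def many_lights(ground_x, lights, total_intensity, is_visible):
    """Split one broad source into N dim point lights and sum the visible ones."""
    per_light = total_intensity / len(lights)
    return sum(per_light for light in lights if is_visible(ground_x, light))


def opacity_hack(ground_x, light, intensity, is_visible, shadow_opacity):
    """Single light whose shadow is faked as partially transparent."""
    if is_visible(ground_x, light):
        return intensity
    return intensity * (1.0 - shadow_opacity)


visible = make_visibility(blocker_y=1.0, blocker_x0=-10.0, blocker_x1=0.0)
area_light = [(x, 2.0) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

for x in (-5.0, 0.0, 5.0):
    soft = many_lights(x, area_light, 1.0, visible)
    faked = opacity_hack(x, (0.0, 2.0), 1.0, visible, shadow_opacity=0.5)
    print(f"x={x:+.1f}  many lights={soft:.2f}  opacity hack={faked:.2f}")
```

At the shadow edge (x = 0) the many-light version gives a partial value of 0.4, and deep in the shadow (x = -5) it correctly falls to zero; the opacity hack reports the same 0.5 at both points, which is the flat, "fake" look Snow complains about.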

Pearl Harbor (2001)

Ben Snow was nominated for the Oscar for Best Visual Effects for Pearl Harbor (2001), shared with Eric Brevig, John Frazier and Edward Hirsh.

So, in the rich tradition of visual effects, says Snow, “we approached the end of the 90s with a bunch of hacks. Of course ray tracing and global illumination were already in use in production and constant improvements were cropping up each year at Siggraph. But we were, and still are to an extent, in love with the look of our RenderMan renders, and were already dealing with scenes of such heavy complexity that ray tracing and global illumination were not practical solutions.”

Ambient Occlusion

Pearl Harbor director Michael Bay challenged the team at ILM to make their planes and ships look more realistic. The film would still be a big miniatures shoot (“we were going to try and put motion control miniature ships into shots,” says Snow), but there would be a host of other shots for which miniatures would not be feasible. So, much as on Galaxy Quest, ILM faced the task of integrating CG elements in full sun into live-action plates, and Snow and the team were determined to improve on what had been done before to meet Bay’s challenge.

Snow thought about Bay’s challenge and decided that environmental lighting would offer the best solution. So the team started looking at environment lighting with mental ray, but mental ray had real problems with the model sizes that ILM needed. Ken McGaugh (now a VFX supervisor at Dneg on John Carter of Mars) spent nearly a month “trying to get the ships to render in mental ray and we kept running into memory issues,” says Snow.

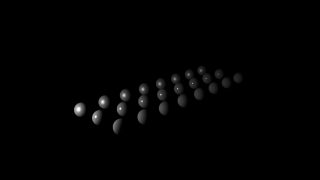

At the same time, Hayden Landis was looking at lighting the ships with environment maps. Snow admits that he encouraged Landis to work on it and try to solve it, while warning that he “was likely to have real problems with the shadows.” This was an approach Snow had discussed “over the years” with Joe Letteri and Barry Armour, but at the time lighting with environment maps did not produce correct self-shadowing, and without good shadowing the technique had never been used in an ILM film. Landis decided to explore lighting the planes with chrome-sphere images recorded on set. Landis and McGaugh “put their heads together” to find a solution. As mentioned above, a reflection occlusion technique had been used on Speed 2, and the team wondered whether they could do something similar for Pearl Harbor, not just for reflections but for full environment lighting. Hayden Landis, Ken McGaugh and Hilmar Koch then adapted the ideas from the Speed 2 reflection occlusion and developed ambient occlusion. This led to the three of them receiving an Academy Technical Achievement Award at the 2010 Oscars. Although it has since become a standard tool, in 2001 ambient occlusion rendering was immediately seen as providing a new level of realism in synthesized imagery. Snow says he normally doesn’t like to spend much time comparing a lighting effect with a render of the same object made using a previous technology (comparing the latest render with reality is a far better yardstick of success). “The shocker for me was when Hilmar got the rock monster from Galaxy Quest and rendered that with ambient occlusion, and I was like ‘Oh my God, if only we had this!’” Ambient occlusion was a way of addressing global illumination and ray tracing at far less expense.

The key to the technique is to make a single ray-traced occlusion pass that is generated independently of the final lighting and final rendering. This special pass was used as part of the final solution in RenderMan, “but we were able to change our materials and lighting without having to calculate these passes,” says Snow. Surface shaders read the occlusion maps and then attenuate the environment to produce realistic reflections, as shown in the second and third images from Pearl Harbor. Several other useful applications were also discovered for ambient occlusion. One of these is creating contact shadows: hiding shadow-casting objects from primary rays but not from secondary rays makes it possible to create contact shadows for objects that can then be applied in the render or the composite.

Around the time of Pearl Harbor there were also some very interesting developments in image based lighting, notably Paul Debevec’s presentations on using high dynamic range images and global illumination to make very realistic renderings. “At ILM we developed ways to improve the dynamic range of our composited images with the introduction of the OpenEXR format,” says Snow. But HDRs were still a post format, not an integrated on-set tool for IBL. Lighting was not the only challenge on Pearl Harbor; there was complex tracking, massive CG models, smoke simulations and much more. No full HDR pipeline was used on the film, and there were issues related to HDR imagery that the team had to fake. While bracketed exposures and bracketed spheres were shot, they were used more for reference than for full IBL.
“We talked about it,” says Snow, “and during the production some of Paul Debevec’s stuff, like Fiat Lux, came out with some really impressive images, but by that stage we were already committed.” As they started examining the real footage from principal photography, the ILM team did notice interesting fringing effects around highlights. “Hayden was, like, ‘look at this highlight!’ – it was frustrating that we can’t pump out numbers like that, so Hayden worked out with one of the compositors to add just a little sweetener in the composite that would bloom them out a bit and make it look more like what the team were seeing from the real Zero planes.” Snow would not use HDRs heavily on any work at ILM, or even during his time at Weta on King Kong, until he returned to ILM to work on Iron Man several years later.
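
The ambient occlusion pass described in this section can be sketched as a per-point ray cast that is completely independent of lights and materials: fire cosine-weighted rays over the hemisphere, count how many escape, and store that accessibility value. Shading then multiplies it into the environment term, so materials and lighting can change without recomputing the pass. This is a minimal sketch, assuming sphere-shaped occluders and a single-channel environment value; the names are hypothetical, not ILM's or RenderMan's.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def cosine_direction(normal, rng):
    """Direction above `normal`, distributed with density cos(theta) / pi."""
    u1, u2 = rng.random(), rng.random()
    r, phi = math.sqrt(u1), 2.0 * math.pi * u2
    helper = (1.0, 0.0, 0.0) if abs(normal[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = normalize(cross(helper, normal))
    b = cross(normal, t)
    lx, ly, lz = r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1)
    return tuple(lx * t[i] + ly * b[i] + lz * normal[i] for i in range(3))


def hits_sphere_within(origin, d, center, radius, max_dist):
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(oc, d)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return False
    root = math.sqrt(disc)
    return any(1e-6 < t <= max_dist for t in (-b - root, -b + root))


def ambient_accessibility(point, normal, occluders, samples=256, max_dist=10.0, seed=0):
    """The occlusion pass: 1.0 = fully open to the environment, 0.0 = fully enclosed."""
    rng = random.Random(seed)
    open_rays = 0
    for _ in range(samples):
        d = cosine_direction(normal, rng)  # one direction per sample, tested against all occluders
        if not any(hits_sphere_within(point, d, c, r, max_dist) for c, r in occluders):
            open_rays += 1
    return open_rays / samples


def shade_diffuse(albedo, env_irradiance, accessibility):
    """Lighting step: reuse the stored accessibility; albedo and environment can change freely."""
    return albedo * env_irradiance * accessibility
```

For a point under a sphere of radius 1 whose center sits 3 units above it, roughly 8/9 of the cosine-weighted hemisphere stays open, since the sphere covers a cap with sin² of its half-angle equal to 1/9.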

Star Wars: Episode II (2002)

Ben Snow was nominated for the Oscar for Best Visual Effects for Star Wars: Episode II – Attack of the Clones (2002), shared with Rob Coleman, Pablo Helman and John Knoll, for his role as one of the film’s visual effects supervisors. ILM, in addition to its roster of other major feature films at the time, produced all the effects for the new Star Wars prequels. Episode II contained the factory chase sequence which, like many other sequences in the prequels, was so dominated by animation rather than live action that ILM’s work filled the screen as fully animated shots and sequences, with only minimal live-action green-screen actors in close-up shots. Visual effects were no longer in the film; they were the film.

Hulk (2003)

Again, Snow was not a supervisor on Hulk, but the film was extremely significant technically for the work ILM would do later. On Hulk, the ILM team built projected environments based on simple geometry to place the digital Hulk character in the scene. While nowhere near as advanced as what would be seen in the films to come, this was one of the first uses of projected environments on reconstructed geometry.
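
The difference between an ordinary environment lookup and projecting captured imagery onto proxy geometry is parallax: an infinitely distant environment is indexed only by ray direction, while a projected set is indexed by where the ray hits the proxy, measured from the position where the panorama was captured. The sketch below assumes a latitude-longitude panorama with +y up and uses a finite sphere (a "dome") as the simplest possible proxy; the names and conventions are hypothetical, and real set reconstructions use rough modelled geometry instead.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def direction_to_latlong_uv(d):
    """Map a direction to (u, v) in a lat-long panorama (assumed convention: +y up)."""
    x, y, z = normalize(d)
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)
    v = math.acos(max(-1.0, min(1.0, y))) / math.pi
    return u, v


def infinite_lookup_uv(ray_dir):
    """Classic environment map: only the ray direction matters."""
    return direction_to_latlong_uv(ray_dir)


def projected_lookup_uv(shading_point, ray_dir, capture_pos, dome_radius):
    """Trace to a proxy dome around the capture position, then look up from the capture point."""
    d = normalize(ray_dir)
    oc = tuple(p - c for p, c in zip(shading_point, capture_pos))
    b = dot(oc, d)
    c = dot(oc, oc) - dome_radius * dome_radius  # negative while inside the dome
    t = -b + math.sqrt(b * b - c)                # far root: where the ray leaves the dome
    hit = tuple(p + t * di for p, di in zip(shading_point, d))
    return direction_to_latlong_uv(tuple(h - cp for h, cp in zip(hit, capture_pos)))
```

At the capture position the two lookups agree; a shading point five units away fetches a different part of the panorama for the same ray direction, which is the parallax a purely directional environment map cannot reproduce.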

“On Hulk we started using Spheron rigs for capturing high resolution and high dynamic range images of the scene, and developed tools to recreate the set and project that captured image material onto rough proxy geometry for ray tracing and faking diffuse reflections,” explained Snow at Siggraph 2010. “This was quite an expensive process at the time and so wasn’t generally used in production at ILM until several years later.” This technique would become key on Iron Man a few years later. HDRs had first appeared in 1985 with Greg Ward’s Radiance file format, and ILM had published OpenEXR by the time of Hulk, but wide-scale on-set HDR capture was not yet standard practice on film sets.

Hulk was also significant for its deployment of subsurface scattering, though it was not the first use of the technique. In the late 90s ILM had tried it in an internal test for an unnamed Jim Cameron project, and the first major character to use subsurface scattering was Dobby in Harry Potter and the Chamber of Secrets (2002).

Snow would leave ILM on a sabbatical for a period and move to Weta to work on King Kong (2005) under his old friend Joe Letteri. Under Letteri on The Lord of the Rings, Weta had moved heavily to subsurface scattering and to nailing the look in a single combined pass out of 3D rather than relying on a multi-pass comp solution. “This was also very much the ILM approach,” explains Snow, “and it still is.” Kong would also deploy advanced subsurface scattering extremely effectively. Snow then returned to ILM for Iron Man. “Well actually they gave me a few shots on Pirates of the Caribbean just to get back into the ILM pipeline,” he recalls.

RenderMan Pro Server

It is worth noting what Pixar’s RenderMan was doing through the period 2000-2010. While ILM has source-code-level access to the renderer, it does not develop it and relies on Pixar’s RenderMan team. The lighting improvements of this decade clearly also owe a debt to that team, as ILM’s R&D group worked very closely with Pixar’s to develop and then standardise many of these developments. From OpenEXR to brick maps, the lighting advances moved in concert with the rendering technology, and the ILM/RenderMan relationship is extremely strong, built on years of mutual research and development.

Snow points to improvements such as brick maps, which store three-dimensional volumetric textures. This, he says, “gives you the ability to bake your reflection information and use it when looking at lighting and other things. You’re doing a pre-pass as to what each surface looks like in 3D space but stored volumetrically.” It was this kind of development that allowed something like Iron Man to “even be rendered.”
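
The bake-then-look-up idea behind brick maps can be illustrated with a much simpler structure. Brick maps are a multiresolution, octree-organized 3D texture format; the sketch below keeps only a single level, a sparse grid that averages baked values per voxel, so treat it as a toy stand-in with hypothetical names rather than RenderMan's format or API.

```python
import math
from collections import defaultdict


class SparseVoxelCache:
    """Single-level toy analogue of a brick map: baked values averaged per voxel."""

    def __init__(self, voxel_size):
        self.voxel_size = voxel_size
        self._sums = defaultdict(float)
        self._counts = defaultdict(int)

    def _key(self, point):
        # Integer voxel coordinates; only voxels that receive samples are stored.
        return tuple(math.floor(c / self.voxel_size) for c in point)

    def bake(self, point, value):
        """Pre-pass: record a shading result (e.g. reflection or irradiance) at a 3D point."""
        key = self._key(point)
        self._sums[key] += value
        self._counts[key] += 1

    def lookup(self, point, default=0.0):
        """Render time: fetch the averaged baked value instead of recomputing it."""
        key = self._key(point)
        count = self._counts.get(key, 0)
        return self._sums[key] / count if count else default

    def __len__(self):
        return len(self._counts)


cache = SparseVoxelCache(voxel_size=0.5)
cache.bake((0.1, 0.1, 0.1), 1.0)
cache.bake((0.2, 0.3, 0.4), 3.0)   # same voxel as the first sample
cache.bake((2.0, 0.0, 0.0), 5.0)
```

The expensive shading happens once in the bake; later renders only pay for a hash lookup, and empty space costs nothing because unsampled voxels are never stored.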

Transformers (2007)

Snow did not work on Transformers, but for that film ILM developed a variety of more realistic car-finish materials for the characters, particularly a specular clear-coat ripple and metal-flake materials. Car paint actually consists of a colored paint layer with a clear coat on top. The Transformers lighting model reproduced the clear coat and its effect on the highlights to give a realistic car ‘highlight ping’. While Transformers may appear to pose the same problem as Iron Man, which followed it, the car finishes on the transforming characters were significantly different from the brushed metal and anisotropic reflections that were so prominent on the Iron Man suit.
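
The layering described above, a colored base under a clear coat, is commonly approximated by letting the coat reflect a Fresnel-weighted share of the light and passing the remainder to the base. This sketch uses Schlick's approximation to Fresnel and assumes a coat index of refraction of 1.5; it ignores absorption in the coat, the second interface on the way out, and the flakes and ripple mentioned above, and the function names are hypothetical rather than ILM's.

```python
def f0_from_ior(n1, n2):
    """Normal-incidence reflectance at an interface between indices n1 and n2."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def schlick_fresnel(cos_theta, f0):
    """Schlick's approximation: reflectance rises from f0 head-on to 1 at grazing angles."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def layered_car_paint(cos_theta, base_color, coat_highlight=1.0, coat_ior=1.5):
    """Clear-coat reflection on top; only light the coat transmits reaches the base paint."""
    f = schlick_fresnel(cos_theta, f0_from_ior(1.0, coat_ior))
    return f * coat_highlight + (1.0 - f) * base_color
```

Viewed head-on the base color dominates (the coat reflects about 4%), while toward grazing angles the coat takes over, which is the highlight behavior that makes a finish read as lacquered rather than as bare paint.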

Iron Man (2008)

Snow was nominated for the Oscar for Best Achievement in Visual Effects for Iron Man (2008), shared with John Nelson, Daniel Sudick and Shane Mahan.

Iron Man followed on the heels of Transformers. “They had done some really cool things with car surfaces,” says Snow. “On Transformers they started gathering high dynamic reference, well should I say high-ish dynamic range, I think they were bracketing three exposures – and so that was the paradigm that we went into Iron Man with.”
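
Bracketing several exposures of the same view and merging them is what turns ordinary photographs into "high-ish" dynamic range data. A minimal per-pixel merge, assuming a linear camera response and pixel values normalized to 0..1 (real pipelines also recover the camera's response curve and align the frames), divides each value by its exposure time and averages the results, trusting mid-tones and ignoring clipped or near-black samples. The weighting thresholds and the names are illustrative assumptions.

```python
def hat_weight(value, low=0.05, high=0.95):
    """Trust mid-tones; give zero weight to near-black (noisy) or near-white (clipped) samples."""
    if value <= low or value >= high:
        return 0.0
    return min(value - low, high - value)


def merge_brackets(pixel_values, exposure_times):
    """Estimate relative scene radiance for one pixel from bracketed exposures."""
    num = den = 0.0
    for value, seconds in zip(pixel_values, exposure_times):
        w = hat_weight(value)
        num += w * value / seconds
        den += w
    if den == 0.0:
        # Every sample clipped or black: fall back to the shortest exposure.
        i = min(range(len(exposure_times)), key=exposure_times.__getitem__)
        return pixel_values[i] / exposure_times[i]
    return num / den


# Three brackets of a pixel whose true radiance is 0.8; the longest exposure clips at 1.0.
times = [0.25, 1.0, 4.0]
radiance = merge_brackets([0.2, 0.8, 1.0], times)
```

With only three brackets, a highlight that clips even in the shortest exposure can only be reported as a lower bound, which is one reason three-exposure capture counts as "high-ish" rather than full dynamic range.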

Anisotropic metals

“Something I had been dissatisfied with on our work in the past was brushed metal surfaces and dull metal surfaces,” says Snow. “Chrome was pretty good, but not the anisotropic metals.” Stan Winston Studios (now Legacy Effects) built suits and partial suits for Iron Man, which ILM had to extend. “The suits were beautiful and we knew we’d have to add all these suits for when Iron Man was testing all his flying stuff,” recalls Snow. Legacy produced a set of color balls for the ILM team to hold up in each shot, as well as a set of metal paint samples that ILM could send off for actual BRDF scans from a lab in Russia or Japan. But Snow explains that while a BRDF scanner is quite good for many surfaces, it does not scan anisotropic surfaces well because the result is so directional: “If you look at the small little round rivets on the suit that had concentric brushed circles, and you get these little bow-tie looking reflections from them.” ILM learned a lot about what the anisotropic surfaces were doing and created an anisotropic specular function, for the silver suit in particular. “So we added a function that would change the behavior of the highlight depending on the direction of the brushed direction on that part of the suit.” Snow does not feel that they totally nailed the anisotropic materials. “But that wasn’t the only problem, even though the metals were looking good, and Iron Man holds up really well and one or two of the shots I couldn’t tell later which bits of the suit we’d done – he was inside, then he was flying outside – he was in a range of lighting set ups and even though we set Iron Man up in a standard sand box environment, we were having to retool and tweak his material for each different lighting setup we were having to put him in.” The suit was highly successful, and the film earned the team another Oscar nomination.
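
An anisotropic highlight of the kind described above needs a tangent direction at each point and two roughness values, one along the brushing and one across it. The sketch below uses the Ward anisotropic specular model as a stand-in (the article does not say which function ILM wrote) plus a hypothetical helper, `concentric_brush_tangent`, that derives the brushing direction on a rivet with concentric grooves. Which roughness goes along versus across the grooves is a look-development choice; the values here are illustrative.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ward_anisotropic(light, view, n, tangent, alpha_t, alpha_b, rho_s=1.0):
    """Ward anisotropic specular BRDF; unit vectors, tangent perpendicular to n."""
    cos_i, cos_o = dot(n, light), dot(n, view)
    if cos_i <= 0.0 or cos_o <= 0.0:
        return 0.0
    bitangent = cross(n, tangent)
    h = normalize(tuple(l + v for l, v in zip(light, view)))
    h_n = dot(h, n)
    exponent = -((dot(h, tangent) / alpha_t) ** 2
                 + (dot(h, bitangent) / alpha_b) ** 2) / (h_n * h_n)
    return rho_s * math.exp(exponent) / (
        4.0 * math.pi * alpha_t * alpha_b * math.sqrt(cos_i * cos_o))


def concentric_brush_tangent(point, rivet_center, n):
    """Hypothetical helper: brushing runs around the rivet, perpendicular to the radius."""
    radial = tuple(p - c for p, c in zip(point, rivet_center))
    return normalize(cross(n, radial))
```

Evaluating `concentric_brush_tangent` at different points around a rivet rotates the tangent frame, so the stretched highlight rotates with it; with a small light this is the mechanism behind bow-tie shaped reflections on round, concentrically brushed parts.
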
After Iron Man, a group of people at ILM were interested in exploring a more complete, real-world solution to lighting that could perhaps reduce the need to re-rig the lighting and materials for every lighting setup. Snow describes the desire to “produce what might be a better, more robust lighting tool. Some of those discussions came up with the idea of a more normalized shading setup that was image based and more correct.”
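
One common reading of a "normalized" shading setup is that a highlight lobe's total reflected energy should not change when an artist makes it sharper or broader, so the same material behaves consistently across lighting setups. As a generic illustration only (the article does not describe ILM's formulation), a Blinn-Phong distribution scaled by (n + 2) / 2π integrates to one over the hemisphere when weighted by the cosine to the normal, whatever the exponent n; the function names below are hypothetical.

```python
import math


def normalized_blinn_phong(cos_theta_h, exponent):
    """Blinn-Phong microfacet distribution with its (n + 2) / (2*pi) normalization."""
    return (exponent + 2.0) / (2.0 * math.pi) * max(0.0, cos_theta_h) ** exponent


def unnormalized_blinn_phong(cos_theta_h, exponent):
    """The same lobe shape without normalization, for comparison."""
    return max(0.0, cos_theta_h) ** exponent


def projected_area(distribution, exponent, steps=20000):
    """Midpoint-rule estimate of the integral of D(h) * cos(theta_h) over the hemisphere."""
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        total += (distribution(math.cos(theta), exponent)
                  * math.cos(theta) * math.sin(theta) * d_theta)
    return 2.0 * math.pi * total  # the azimuthal integral contributes 2*pi


for n in (5, 50, 500):
    print(n,
          round(projected_area(normalized_blinn_phong, n), 4),
          round(projected_area(unnormalized_blinn_phong, n), 4))
```

The normalized column stays at 1 while the unnormalized one shrinks as 2π/(n + 2), so an un-normalized highlight gets dimmer overall as it gets sharper and its intensity has to be re-balanced by hand.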

Terminator Salvation (2009)

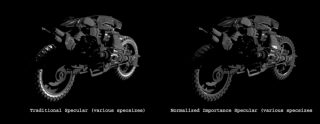

Snow went directly from Iron Man onto Terminator Salvation, which provided some interesting challenges. The final sequence takes place in a dark factory full of flashes, fires and sparks, while the opening of the film is set in harsh exterior lighting. The team set out to build CG vehicles recreated from actual vehicles in the film, using a truck as their test bed. The team rendered it, and it compared very favorably with the live-action truck. But Snow says “it was hard from just this to make the case that this was really the better (way to go).” So ILM decided to render the Moto-Terminator with both the current and the new render approaches. They then compared the new CG version with both the practical original and the old render model.

“It wasn’t like the leap on the rock monster before and after ambient occlusion,” says Snow, “but there was just this thing where the highlights read a little bit hotter, and it looks just this bit more realistic, and compared to the plate (photography) the highlights matched much more and they were blown out on the metals much more like the plate. It was a slightly better look, but it made for a much more robust image that you could manipulate in the comp and it would behave much more like the live action photography. The way it behaved in light was more realistic – it had much better fall-off, for example.”

Terminator used the new, more normalized lighting tool, not on every shot but on a couple of big hero sequences. The move to the new tool did spark something of a holy war at ILM. Many of the team were happy with the tools and tricks they had, and were, in fairness, using them very effectively. So in the end the approach on Terminator was a hybrid: many of the cheat tools that artists knew and loved were enabled, so that people could still use them on the film and tweak lights in ways that were not physically correct. As Snow comments, “Hey, everything we do in the visual effects business is a bit of a cheat, and a little bit of magic, but if we can make the cheating less difficult or make it more physically correct in a way to make it less complex to do things then that should be the goal. Because it will be more realistic and easier for people to understand, but hey we have all built our careers on these cheats and there have been really good lighters making great shots before any of this.”

Iron Man 2 (2010)

Iron Man 2 adopted the new model as its base for the entire production. Snow believes that while the suit in the first Iron Man stands up really well, the new lighting model brought great benefits beyond its subtle visual improvements. With it, artists reach a great starting point more quickly, and the reduced set-up time and dramatically reduced relighting for every setup are, in his words, “almost as valuable as anything.”

Energy conservation

The new system uses energy conservation, which means that lights behave much more like real-world lights: the amount of light that reflects or bounces off a surface can never be more than the amount of light hitting it. In the traditional world of CG, by contrast, specular highlights and reflections were separate controls and concepts, as were diffuse and ambient light. Under the previous model, if you had three spotlights pointing down at a surface and varied the specsize, the specular highlight from each light did not get darker as the specsize increased. “Indeed the specular model we have been using for years at ILM actually gets much brighter at grazing angles, so the actual specular values are very hard to predict,” says Snow. Under the new energy-conserving system, the normalized specular function behaves the same way a reflection does: as the specsize increases, the intensity of the specular goes down. Previously the artist had to know this and dial down the specular as the highlight got broader. A good artist would know to do this, but it had to be dialed in during look development; different materials on the same model might behave differently, and objects would behave differently in different lighting environments, so they had to be hand-tweaked in each setup.
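
To make the specsize behavior concrete, here is a minimal sketch using a textbook Phong-style lobe cos^n rather than ILM's actual specular function, which the article does not give. A smaller exponent `n` stands in for a larger "specsize". This mapping is an assumption. Without normalization the peak stays at 1 while the lobe's total energy grows as it widens, which is exactly what an artist had to dial down by hand. Scaling by (n + 1) / (2π) keeps the total at 1, so the peak drops automatically as the highlight broadens.

```python
import math


def phong_lobe(cos_angle: float, n: float, normalized: bool) -> float:
    """Phong-style specular lobe cos^n around the reflection direction.

    With normalized=True the lobe is scaled by (n + 1) / (2*pi), which makes it
    integrate to exactly 1 over the hemisphere around the reflection direction.
    """
    scale = (n + 1.0) / (2.0 * math.pi) if normalized else 1.0
    return scale * max(cos_angle, 0.0) ** n


def lobe_energy(n: float, normalized: bool, steps: int = 20000) -> float:
    """Integrate the lobe over the hemisphere with the midpoint rule in theta.

    d(omega) = sin(theta) d(theta) d(phi); the phi integral contributes 2*pi.
    """
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        total += phong_lobe(math.cos(theta), n, normalized) * math.sin(theta) * d_theta
    return 2.0 * math.pi * total


# Smaller n = broader highlight (a "bigger specsize" under our assumed mapping).
for n in (200.0, 50.0, 10.0, 2.0):
    print(f"n={n:>5}: peak old={phong_lobe(1.0, n, False):.2f} "
          f"new={phong_lobe(1.0, n, True):.2f}  "
          f"energy old={lobe_energy(n, False):.3f} new={lobe_energy(n, True):.3f}")
```

The unnormalized energy is 2π/(n + 1), so it climbs as the lobe widens. The normalized column stays at 1.000 while its peak falls, which is the "as the specsize increases, the intensity goes down" behavior described above.
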

Another example is fall-off. Snow jokes that many artists simply turn fall-off off. “As FX supervisors we are always asking, do you have fall-off on? – you need to turn fall-off on.” Many artists have an approximation for this in their toolbox, but most of the time it is a simple inverse-distance equation, whereas light from a point source physically falls off with the inverse square of distance. And if a surface is shiny, a highlight should not drop off as much or as fast as it does on a duller surface, so correct fall-off is not just a function of distance; it also depends on the material.
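
A small sketch of the physics behind that point, not ILM's implementation: it compares the inverse-distance approximation with inverse-square fall-off from a point light, and shows why a shiny surface behaves differently. A perfect mirror preserves radiance, so the reflected highlight of a small area light stays equally bright as the light moves away. Only the solid angle the light subtends, and therefore the size of its reflection, shrinks. The intensities, albedo and reflectance values are illustrative assumptions.

```python
import math


def inverse_distance(intensity: float, d: float) -> float:
    """The simple 'artist' approximation the text mentions: falls off as 1/d."""
    return intensity / d


def inverse_square(intensity: float, d: float) -> float:
    """Irradiance from a point light at normal incidence: falls off as 1/d^2."""
    return intensity / (d * d)


def diffuse_radiance(intensity: float, d: float, albedo: float = 0.5) -> float:
    """Lambertian (dull) surface: outgoing radiance = albedo / pi * irradiance."""
    return albedo / math.pi * inverse_square(intensity, d)


def mirror_highlight(source_radiance: float, source_area: float, d: float,
                     reflectance: float = 0.9) -> tuple[float, float]:
    """Perfectly shiny surface reflecting a small area light of given radiance.

    Returns (highlight radiance, solid angle the light subtends from the shading
    point). Radiance is preserved by the mirror, so it does not depend on d; the
    solid angle is approximated as area / d^2 (small light facing the surface).
    """
    return reflectance * source_radiance, source_area / (d * d)


for d in (1.0, 2.0, 4.0):
    radiance, solid_angle = mirror_highlight(10.0, 0.25, d)
    print(f"d={d}: 1/d={inverse_distance(100.0, d):.1f}  "
          f"1/d^2={inverse_square(100.0, d):.2f}  "
          f"diffuse={diffuse_radiance(100.0, d):.3f}  "
          f"mirror radiance={radiance:.1f} size={solid_angle:.4f} sr")
```

Doubling the distance quarters the diffuse response but leaves the mirror highlight's brightness unchanged, which is one way to read Snow's point that correct fall-off depends on the material as well as the distance.
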

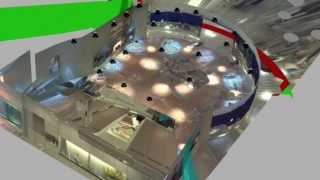

The Iron Man 2 pipeline also moved to a more image-based lighting system. By Iron Man 2 the team was recording HDRs on set much more accurately, and at multiple points around each set. This led to combining several technologies, and the work of several departments, to produce HDR IBL environments built from multiple HDRs.

In parallel with the new energy-conserving lighting model, many of the scenes were fully rebuilt with simple geometry, now mapped with multiple HDRs taken from several points around a room. These multiple HDRs combine so that a character can move around the room and remain correctly lit. It also goes further: if a character in a scene would normally cast a shadow, that shadow feeds into the environment HDR, so one character can reduce the radiosity (bounce light) cast back from an HDR onto a second character or suit, as was the case in the Stark fight sequence. The HDR environment is no longer just the kind of environment sphere that led to the ambient occlusion solution, but a real environment that interacts with the CG characters from a lighting perspective.
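
The article does not say how ILM projected and combined its HDRs, so the following is only a minimal sketch of the general idea. Once captured radiance lives on proxy set geometry rather than on an infinitely distant sphere, the light arriving from a given direction depends on where the character stands. The room is assumed to be an axis-aligned box, and `wall_radiance` is a hypothetical stand-in for HDR data mapped onto its walls: a made-up bright window on one wall, with dim walls elsewhere.

```python
from dataclasses import dataclass

Vec = tuple[float, float, float]


@dataclass
class ProxyRoom:
    """Axis-aligned box standing in for the rebuilt set geometry."""
    lo: Vec
    hi: Vec

    def exit_point(self, origin: Vec, direction: Vec) -> Vec:
        """Point where a ray starting inside the box leaves it (slab method)."""
        t_exit = float("inf")
        for o, d, lo, hi in zip(origin, direction, self.lo, self.hi):
            if d > 0.0:
                t_exit = min(t_exit, (hi - o) / d)
            elif d < 0.0:
                t_exit = min(t_exit, (lo - o) / d)
        return (origin[0] + t_exit * direction[0],
                origin[1] + t_exit * direction[1],
                origin[2] + t_exit * direction[2])


def wall_radiance(room: ProxyRoom, p: Vec) -> float:
    """Hypothetical radiance mapped onto the proxy walls from the HDR captures:
    a bright window on the +x wall, dim walls everywhere else."""
    on_window_wall = abs(p[0] - room.hi[0]) < 1e-9
    in_window = 1.0 <= p[1] <= 2.0 and 1.0 <= p[2] <= 2.0
    return 50.0 if on_window_wall and in_window else 0.5


def incoming_radiance(room: ProxyRoom, position: Vec, direction: Vec) -> float:
    """Light arriving at `position` from `direction`, looked up on the proxy geometry."""
    return wall_radiance(room, room.exit_point(position, direction))


room = ProxyRoom(lo=(0.0, 0.0, 0.0), hi=(5.0, 3.0, 3.0))
toward_window = (1.0, 0.0, 0.0)
print(incoming_radiance(room, (1.0, 1.5, 1.5), toward_window))  # 50.0: sees the window
print(incoming_radiance(room, (1.0, 0.5, 1.5), toward_window))  # 0.5: sees the wall beside it
```

A distant environment sphere is indexed by direction alone, so it would return the same value at both positions. Looking the radiance up on geometry is what lets a character move through the room and pick up position-dependent lighting.
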

Note the ball reference at the bottom

The difficulty is that each HDR is inherently static: the bracketed nature of the process means that you get a series of static HDRs. Snow and the team explored several options to overcome this, as it had also been a big problem with the HDRs taken for the factory end fight in Terminator. In the fight that happens in Tony Stark’s house, the fireplace should be flickering, so ILM tried taking multiple exposures and combining them; as fire is fairly forgiving, this worked for that sequence. The major opening stage/dance sequence, where Iron Man ‘drops in’ to the Stark Expo, was much more difficult.

The normal process for an HDR is to shoot a set of bracketed exposures on a Canon 1Ds using an 8mm fisheye lens on a Nodal Ninja head mounted on a tripod. ILM’s data team shoots in manual exposure mode with manual focus and image stabilization off: 7 exposures, 3 stops apart, with the center exposure set at 1/32 sec, f/16 and ISO 100. For exterior work the camera normally has a 0.6 ND (2-stop) filter added to the lens. For the Stark Expo they tried mounting that 8mm lens on a VistaVision camera (“While it doesn’t give HDR, film gives a better range,” explains Snow). They also compared this with shooting the 8mm on the Canon in video mode, but the 8-bit files from the Canon’s video mode limited its usefulness. In the end, as an industry we just don’t yet have a good solution for shooting multiple exposures or HDR moving footage to produce 360-degree HDRs, let alone syncing and stitching them together.

To finish on a positive note, Snow himself lit some shots in the new energy-conserving IBL system and found it a much better way to work and an easy system to deal with, especially as it much more closely models what actually happens on set when a DOP moves or adjusts a light.
Snow believes that the new ILM system, by being more physically correct, not only produces technically better results but also allows greater creative freedom.
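
The bracketing recipe quoted in this section (7 exposures, 3 stops apart, centered at 1/32 sec, with a 0.6 ND for exteriors) is easy to check with a little arithmetic. The sketch below also merges one simulated pixel across the bracket with the common hat-weighted average of value divided by exposure time, in the spirit of Debevec and Malik's method. It is simplified by assuming linear pixel values in [0, 1], so no camera response recovery is done. This is not a description of ILM's actual pipeline, and the scene radiance of 10 is an invented test value.

```python
import math
from fractions import Fraction


def bracket_schedule(center: Fraction, count: int = 7, stops_apart: int = 3) -> list[Fraction]:
    """Shutter times for `count` exposures spaced `stops_apart` stops around `center`."""
    half = count // 2
    return [center * Fraction(2) ** (stops_apart * k) for k in range(-half, half + 1)]


def nd_stops(optical_density: float) -> float:
    """Stops of light lost through an ND filter: each 0.3 of density is about 1 stop."""
    return optical_density / math.log10(2.0)


def hat_weight(z: float) -> float:
    """Trust mid-tones; distrust pixels near black (noise) or white (clipping)."""
    return max(0.0, 1.0 - abs(2.0 * z - 1.0))


def merge_pixel(values: list[float], times: list[Fraction]) -> float:
    """Weighted average of value / time across the bracket (assumes LINEAR values)."""
    num = den = 0.0
    for z, t in zip(values, times):
        w = hat_weight(z)
        num += w * z / float(t)
        den += w
    return num / den if den > 0.0 else float("nan")  # nan: every frame clipped


times = bracket_schedule(Fraction(1, 32))
print([str(t) for t in times])
# ['1/16384', '1/2048', '1/256', '1/32', '1/4', '2', '16']
print(round(nd_stops(0.6), 2))  # 1.99, i.e. the "2-stop" ND

# Simulate one bright scene point: longer exposures clip at 1.0 and get zero weight.
radiance = 10.0
pixels = [min(1.0, radiance * float(t)) for t in times]
print(merge_pixel(pixels, times))  # approximately 10.0
```

The seven shutter times span 18 stops (16 s divided by 1/16384 s is 2^18) on top of the sensor's own range. That span is what makes a single bracket useful for HDR capture, and it is also why the process is inherently static: the frames are taken one after another.
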

Ben Snow, three-time Oscar nominee

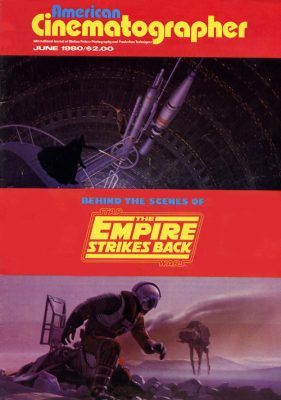

Ben Snow grew up in the 70s and 80s, a self-proclaimed film enthusiast “with a particular obsession with monster movies and horror movies,” he says. Growing up in regional Australia, Snow made films, ran a film group and tried to soak up as much information from Hollywood as he could. As a young man he bought Cinefantastique and later Cinefex, and became a special effects fan, avidly reading Issue #732 of American Cinematographer in June 1980 and its interview with a young Dennis Muren working on The Empire Strikes Back. Fittingly, some 22 years later Snow would be nominated for an Oscar with Rob Coleman, Pablo Helman and John Knoll for Star Wars Episode II. Snow first met John Knoll on the set of his first ILM film, Star Trek Generations. Snow was a computer effects artist and Knoll the show’s visual effects supervisor, a fact that did not stop Snow from strolling up to Knoll on set, while Knoll was shooting VistaVision plates of the Enterprise-B for textures, and explaining to him how he should photograph models for the new requirements of digital CG animation. Snow, who assumed Knoll came from a photographic background and ILM’s camera department, did not discover until several weeks later, “when we were in at work on an OT Saturday and I was having lunch with John and some guys, that John mentioned that he had actually been part of the genesis of Photoshop!”

Ben Snow had joined ILM after working in London, first as a runner and then as one of only four members of MPC’s new computer graphics department, “as a systems guy.” After MPC he returned to Australia, to Crows Nest in Sydney, where he was to set up the 3D department for Conja. “3D department!” he laughs, “it was more like – get a box and have a 3D artist work on it.” He joined ILM by crashing an ILM party at the Nixon Library during Siggraph 1993, the year Jurassic Park was released.
“There was a killer party at the Nixon library organized by Steve ‘Spaz’ Williams and Mark Dippé – they had Timothy Leary talking – and it was like – Oh my god – this is Hollywood!” At that party he ran into Geoff Campbell, who had been a modeler at MPC and had taught Snow Alias 1.0 in the UK. By then Campbell was at ILM. He pushed Snow to apply, and a few days later Snow was on a conference call with Doug Smythe, Joe Letteri and Steve Rosenbaum. To hear more about Ben’s career, how he joined ILM, and the films above, listen to the new fxpodcast interview between Ben and Mike Seymour, recorded in the lead-up to the 2011 Oscar bake-off.